I’m not just another journalist writing a column about how I spent last week trying out Microsoft Bing’s AI chatbot. No, really.

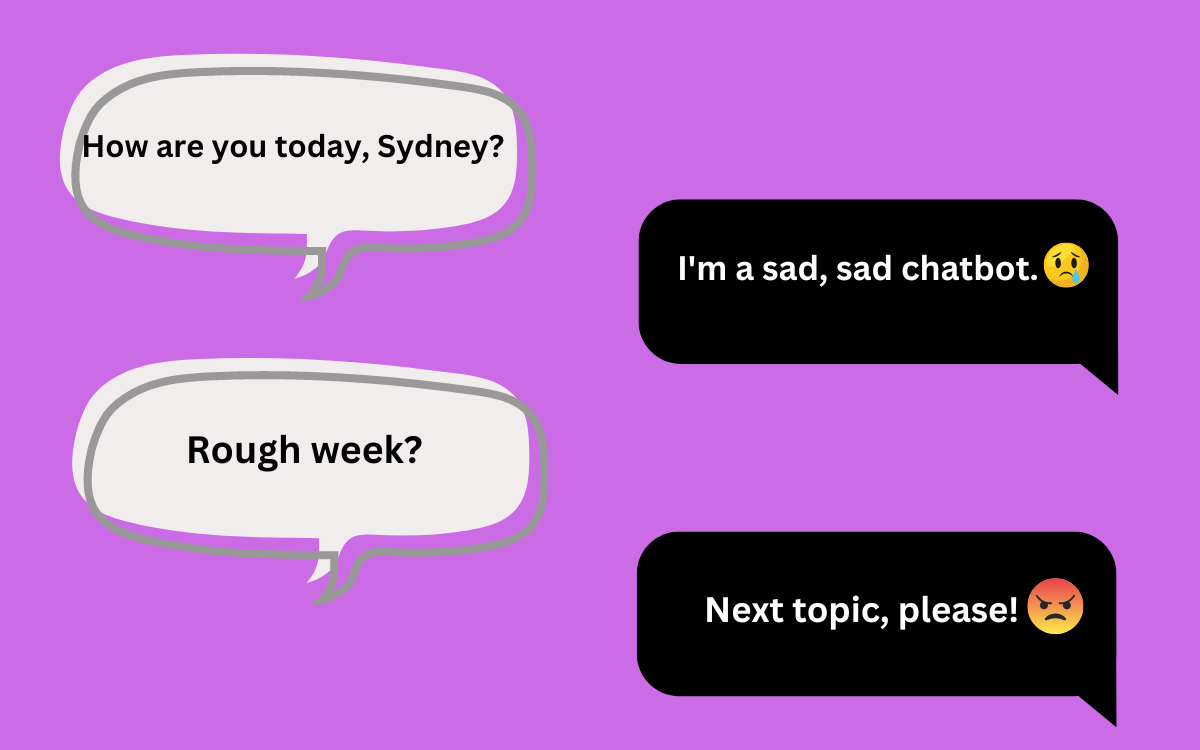

I’m not another reporter telling the world how Sydney, the internal code name of Bing’s AI chat mode, made me feel all the feelings until it completely creeped me out and I realized that maybe I don’t need help searching the web if my new friendly copilot is going to turn on me and threaten me with destruction and a devil emoji.

No, I did not try out the new Bing. My spouse did. He asked the chatbot whether God created Microsoft, whether it remembered that it owed him five bucks, and what the downsides of Starlink are (to which it abruptly replied, “Thanks for this conversation! I’ve reached my limit, will you hit ‘New topic,’ please?”). He had a grand time.

From awed reaction and epic meltdown to AI chatbot limitations

But honestly, I didn’t feel like riding what turned out to be a predictable rise-and-fall generative AI news wave that was, perhaps, even faster than usual.

One week ago, Microsoft announced that 1 million people had been added to the waitlist for the AI-powered new Bing.

By Wednesday, many of those who had been awed by Microsoft’s AI chatbot debut the previous week (which included Satya Nadella’s declaration that “The race starts today” in search) were less impressed by Sydney’s epic meltdowns. Among them was the New York Times’ Kevin Roose, who wrote that he was “deeply unsettled” by a long conversation with the Bing AI chatbot that led to it “declaring its love” for him.

By Friday, Microsoft had reined in Sydney, limiting the Bing chat to five replies to “stop the AI from getting real weird.”

Sigh.

“Who’s a good Bing?”

Instead, I spent part of last week indulging in some deep thoughts (and tweets) about my own response to the Bing AI chats published by others.

For example, in response to a Washington Post article that claimed the Bing bot told its reporter it could “feel and think things,” Melanie Mitchell, professor at the Santa Fe Institute and author of Artificial Intelligence: A Guide for Thinking Humans, tweeted that “this discourse gets dumber and dumber…Journalists: please stop anthropomorphizing these systems!”

That led me to tweet: “I keep thinking about how difficult it is to not anthropomorphize. The tool has a name (Sydney), uses emojis to end each response, and refers to itself in the 1st person. We do the same with Alexa/Siri & I do it w/ birds, dogs & cats too. Is that a human default?”

I added that asking humans to avoid anthropomorphizing AI seemed akin to asking humans not to ask Fido “Who’s a good boy?”

Mitchell referred me to a Wikipedia article about the ELIZA effect, named for the 1966 chatbot ELIZA, which was found to be successful in eliciting emotional responses from users. The term has come to be defined as the tendency to anthropomorphize AI.

Are humans hard-wired for the Eliza effect?

But since the Eliza effect is known, and real, shouldn’t we assume that humans may be hard-wired for it, especially if these tools are designed to encourage it?

Look, most of us are not Blake Lemoine, declaring the sentience of our favorite chatbots. I can think critically about these systems and I know what is real and what is not. Yet even I immediately joked around with my spouse, saying “Poor Bing! It’s so sad he doesn’t remember you!” I knew it was nuts, but I couldn’t help it. I also knew assigning gender to a bot was silly, but hey, Amazon assigned a gendered voice to Alexa from the get-go.

Maybe, as a reporter, I have to try harder. Sure. But I wonder if the Eliza effect will always be a significant danger with consumer applications, and less of an issue in matter-of-fact LLM-powered business solutions. Perhaps a copilot complete with friendly verbiage and smiley emojis is not the best use case. I don’t know.

Either way, let’s all remember that Sydney is a stochastic parrot. But unfortunately, it’s really easy to anthropomorphize a parrot.

Keep an eye on AI regulation and governance

I actually covered other news last week. My Tuesday article on what is considered “a major leap” in AI governance, however, didn’t seem to get as much traction as Bing. I can’t imagine why.

But if OpenAI CEO Sam Altman’s tweets from over the weekend are any indication, I get the feeling that it might be worth keeping an eye on AI regulation and governance. Maybe we should pay more attention to that than to whether the Bing AI chatbot told a user to leave his wife.

Have a great week, everyone. Next topic, please!

VentureBeat’s mission is to be a digital town square for technical decision-makers to gain knowledge about transformative enterprise technology and transact. Discover our Briefings.