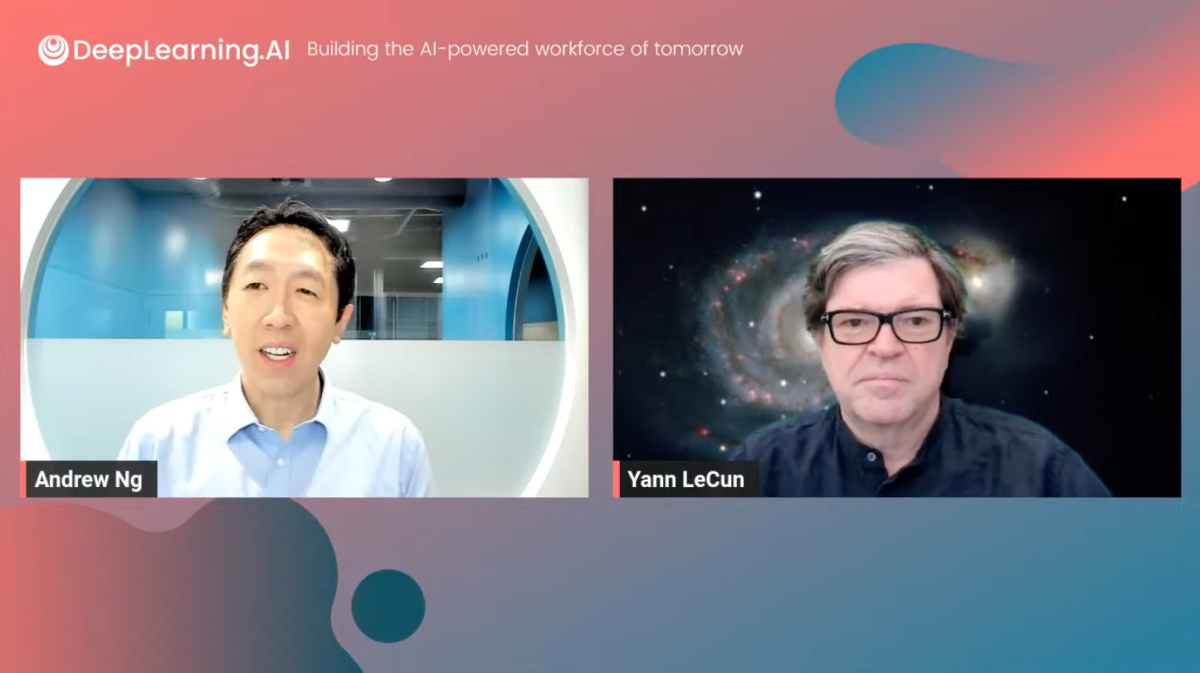

Two prominent figures in the artificial intelligence industry, Yann LeCun, the chief AI scientist at Meta, and Andrew Ng, the founder of DeepLearning.AI, argued against a proposed pause on the development of powerful AI systems in an online discussion on Friday.

The discussion, titled “Why the 6-Month AI Pause Is a Bad Idea,” was hosted on YouTube and drew thousands of viewers.

During the event, LeCun and Ng challenged an open letter, signed last month by hundreds of artificial intelligence experts, tech entrepreneurs and scientists, that called for a moratorium of at least six months on training AI systems more powerful than GPT-4, a text-generating program that can produce realistic and coherent replies to almost any question or topic.

“We have thought at length about this six-month moratorium proposal and felt it was an important enough topic — I think it would actually cause significant harm if the government implemented it — that Yann and I felt like we wanted to chat with you here about it today,” Mr. Ng said in his opening remarks.

Ng first explained that the field of artificial intelligence had seen remarkable advances in recent decades, especially in the last few years. Deep learning techniques enabled the creation of generative AI systems that can produce realistic text, images and sounds, such as ChatGPT, LLaMA, Midjourney, Stable Diffusion and DALL-E. These systems raised hopes for new applications and possibilities, but also concerns about their potential harms and risks.

Some of these concerns related to the present and near future, such as fairness, bias and socioeconomic displacement. Others were more speculative and distant, such as the emergence of artificial general intelligence (AGI) and its possible malicious or unintended consequences.

“There are probably several motivations from the various signatories of that letter,” said LeCun in his opening remarks. “Some of them are, perhaps on one extreme, worried about AGI being turned on and then eliminating humanity on short notice. I think few people really believe in this kind of scenario, or believe it’s a definite threat that cannot be stopped.”

“Then there are people who are more reasonable, who think that there is real potential harm and danger that needs to be dealt with — and I agree with them,” he continued. “There are a lot of issues with making AI systems controllable, and making them factual, if they’re supposed to provide information, etc., and making them non-toxic. There is a bit of a lack of imagination in the sense of, it’s not like future AI systems will be designed on the same blueprint as current auto-regressive LLMs like ChatGPT and GPT-4 or other systems before them like Galactica or Bard or whatever. I think there’s going to be new ideas that are gonna make those systems much more controllable.”

Growing debate over how to regulate AI

The online event was held amid a growing debate over how to regulate new large language models (LLMs) that can produce realistic text on almost any topic. These models, which are based on deep learning and trained on massive amounts of online data, have raised concerns about their potential for misuse and harm. The debate escalated three weeks ago, when OpenAI released GPT-4, its latest and most powerful model.

In their discussion, Mr. Ng and Mr. LeCun agreed that some regulation was necessary, but not at the expense of research and innovation. They argued that a pause on developing or deploying these models was unrealistic and counterproductive. They also called for more collaboration and transparency among researchers, governments and corporations to ensure the ethical and responsible use of these models.

“My first reaction to [the letter] is that calling for a delay in research and development smacks me of a new wave of obscurantism,” said LeCun. “Why slow down the progress of knowledge and science? Then there is the question of products…I’m all for regulating products that get in the hands of people. I don’t see the point of regulating research and development. I don’t think that serves any purpose other than reducing the knowledge that we could use to actually make technology better, safer.”

“While AI today has some risks of harm, like bias, fairness, concentration of power — those are real issues — I think it’s also creating tremendous value. I think with deep learning over the last 10 years, and even in the last year or so, the number of generative AI ideas and how to use it for education or healthcare, or responsive coaching, is incredibly exciting, and the value so many people are creating to help other people using AI.”

“I think as amazing as GPT-4 is today, building it even better than GPT-4 will help all of these applications and help a lot of people,” he added. “So pausing that progress seems like it would create a lot of harm and slow down the creation of very valuable stuff that will help a lot of people.”

Watch the full video of the conversation on YouTube.

VentureBeat’s mission is to be a digital town square for technical decision-makers to gain knowledge about transformative enterprise technology and transact. Discover our Briefings.